Challenge Description

In the "High-level Human Interaction Recognition Challenge", contestants are expected to recognize ongoing human activities from continuous videos. The intention is to motivate researchers to explore the recognition of complex human activities from continuous videos, taken in realistic settings. Each video contains several human-human interactions (e.g. hand shaking) occurring sequentially and/or concurrently. The contestants must correctly annotate which activity is occurring when and where for all videos. Irrelevant pedestrians are also present in some videos. Accurate detection and localization of human activities are required, instead of a brute force classification of videos.

Types of Activities in the Interaction Challenge

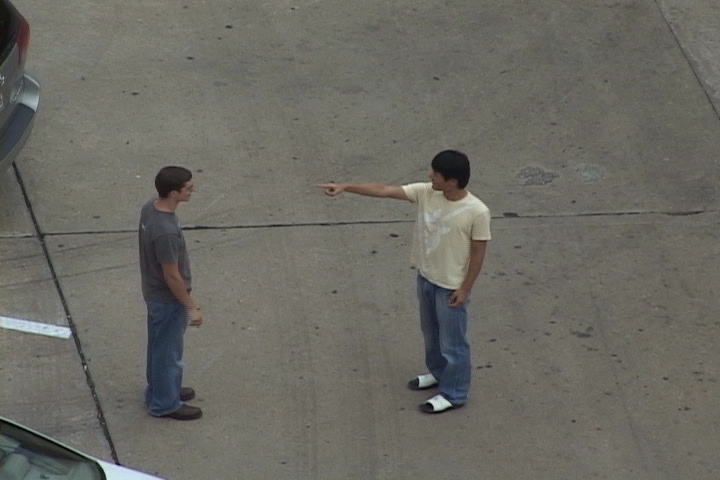

Hand Shaking Hugging Kicking Pointing Punching Pushing

Dataset

The UT-Interaction dataset contains videos of continuous executions of 6 classes of human-human interactions: shake-hands, point, hug, push, kick and punch. Ground truth labels for these interactions are provided, including time intervals and bounding boxes. There is a total of 20 video sequences whose lengths are around 1 minute. Each video contains at least one execution per interaction, providing us 8 executions of human activities per video on average. Several participants with more than 15 different clothing conditions appear in the videos. The videos are taken with the resolution of 720*480, 30fps, and the height of a person in the video is about 200 pixels.

We divide videos into two sets. The set 1 is composed of 10 video sequences taken on a parking lot. The videos of the set 1 are taken with slightly different zoom rate, and their backgrounds are mostly static with little camera jitter. The set 2 (i.e. the other 10 sequences) are taken on a lawn in a windy day. Background is moving slightly (e.g. tree moves), and they contain more camera jitters. From sequences 1 to 4 and from 11 to 13, only two interacting persons appear in the scene. From sequences 5 to 8 and from 14 to 17, both interacting persons and pedestrians are present in the scene. In sets 9, 10, 18, 19, and 20, several pairs of interacting persons execute the activities simultaneously. Each set has a different background, scale, and illumination.

Full dataset is available here: set1

set2

ground_truths

set1_segmented

set2_segmented

High-level Human Interaction Sample Videos

Sample zip file

Performance Evaluation Methodology

The performance of the systems created by contest participants must be evaluated using 10-fold leave-one-out cross validation per set. That is, for each set, we leave one among 10 sequences for the testing and use the other 9 for the training. Participants must measure the performance 10 times while changing the test set iteratively, finding the average performance.

For each set, we have provided 60 activity executions that will be used for the training and testing. The performance must measured using the selected 60 activity executions. The other executions, marked as 'others' in our dataset, must not be used for the evaluation.

We want participants to evaluate their systems with two different experimental settings: classification and detection. For the 'classification', participants are expected to use 120 video segments cropped based on the ground truth. The performance of classifying a testing video segment into its correct category must be measured. In the 'detection' setting, the activity recognition is measured to be correct if and only if the system correctly annotates an occurring activity's time interval (i.e. a pair of starting time and ending time) and its spatial bounding box. If the annotation overlaps with the ground truth more than 50% spatially and temporally, the detection is treated as a true positive. Otherwise, it is treated as a false positive. Contestants are expected to submit Precision-recall curves based on the average true positive rate and the false positive rate.

Citation

If you make use of the UT-Interaction dataset in any form, please cite the following reference.

@misc{UT-Interaction-Data,

author = "Ryoo, M. S. and Aggarwal, J. K.",

title = "{UT}-{I}nteraction {D}ataset, {ICPR} contest on {S}emantic {D}escription of {H}uman {A}ctivities ({SDHA})",

year = "2010",

howpublished = "http://cvrc.ece.utexas.edu/SDHA2010/Human\_Interaction.html"

}

In addition, the previous version of this dataset (i.e. set 1) was introduced in the following paper. You may want to check this paper, if you are interested in its results.

@inproceedings{ryoo09,

author = "Ryoo, M. S. and Aggarwal, J. K.",

title = "Spatio-Temporal Relationship Match: Video Structure Comparison for Recognition of Complex Human Activities",

booktitle = "IEEE International Conference on Computer Vision (ICCV)",

year = "2009",

location = "Kyoto, Japan",

}